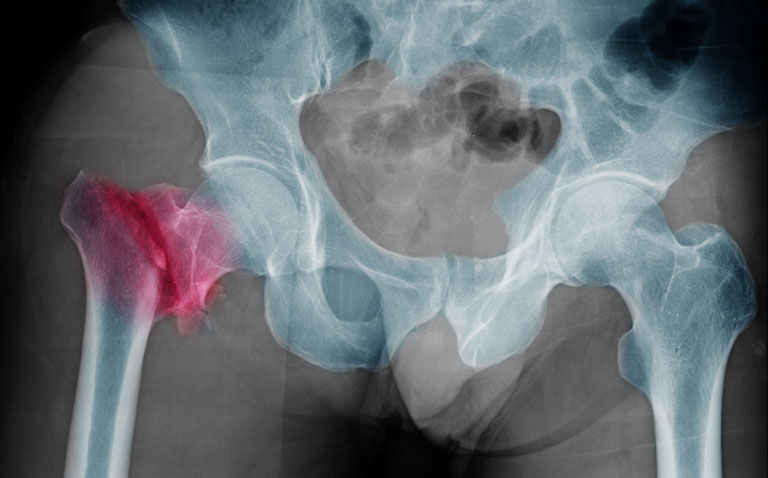

A systematic review has found that AI models provide diagnostic ability for hip fractures similar to that of expert radiologists.

In a systematic review and meta-analysis, Canadian researchers found that the performance of artificial intelligence (AI) models for diagnosing hip fractures was comparable with that of expert radiologists and surgeons.

AI models are increasingly being used for a range of healthcare applications, although evidence of a beneficial effect on clinicians' diagnostic performance is sparse.

Nevertheless, models based on deep learning algorithms show some promise for diagnosis, with findings to date suggesting that their diagnostic performance is equivalent to that of healthcare professionals.

Hip fractures are associated with substantial morbidity and mortality. The researchers in the current study therefore set out to determine how useful an AI model (AIM) is for automatically identifying and classifying hip fractures, and how its performance compares with that of clinicians.

The team performed a systematic review of the literature and included studies that developed machine learning models either to diagnose hip fractures from hip or pelvic radiographs or to predict any postoperative patient outcome following hip fracture surgery.

The team compared the diagnostic accuracy of AIMs with that of expert clinicians, and used the area under the curve (AUC) to compare machine learning models with traditional statistical models for predicting postoperative outcomes such as mortality.

AI model and hip fracture diagnosis

A total of 39 studies were included: 18 (46.2%) used an AIM to diagnose hip fractures on plain radiographs and 21 (53.8%) used an AIM to predict patient outcomes following hip fracture surgery.

Compared with clinicians, the odds ratio for diagnostic error with AI models on hip fracture radiographs was 0.79 (95% CI, 0.48–1.31; p = 0.36). In other words, although the point estimate favoured the AI models, the confidence interval includes 1, so the models were not statistically better than clinicians.
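
As a rough check on how the reported interval and p-value fit together, the sketch below back-calculates a standard error from the 95% CI and derives an approximate two-sided p-value. It assumes (this is not stated in the study) that the interval was computed on the log-odds scale with a normal approximation; the function name `p_from_ci` is hypothetical.

```python
import math


def p_from_ci(odds_ratio: float, lower: float, upper: float,
              z_crit: float = 1.959964) -> float:
    """Approximate two-sided p-value for an odds ratio from its 95% CI.

    Assumption: the CI is symmetric on the log scale (Wald-type, normal approximation).
    """
    # On the log scale the CI spans 2 * z_crit standard errors
    se = (math.log(upper) - math.log(lower)) / (2 * z_crit)
    # z statistic for the null hypothesis OR = 1, i.e. log(OR) = 0
    z = math.log(odds_ratio) / se
    # Two-sided p-value from the standard normal distribution
    return math.erfc(abs(z) / math.sqrt(2))


# Figures reported for AI models vs clinicians on hip fracture radiographs
or_ai, lo, hi = 0.79, 0.48, 1.31
p = p_from_ci(or_ai, lo, hi)
ci_includes_one = lo <= 1.0 <= hi
print(f"approx p = {p:.2f}; CI includes 1: {ci_includes_one}")
```

Under that assumption the calculation gives roughly p = 0.36, in line with the reported value, and a CI that spans 1 is what marks the difference as non-significant.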

In addition, the mean sensitivity of the models was 89.3%, the specificity 87.5%, and the F1 score (the harmonic mean of precision and recall, ranging from 0 to 1) was 0.90.
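
All three metrics come from a 2×2 confusion matrix. The sketch below uses made-up counts purely for illustration (they are not from the study). It also shows why the F1 score cannot be recovered from sensitivity and specificity alone: F1 combines sensitivity (recall) with precision, and precision shifts with how common fractures are in the image set.

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    tp: int  # fractures correctly flagged
    fp: int  # non-fractures wrongly flagged
    tn: int  # non-fractures correctly cleared
    fn: int  # fractures missed


def sensitivity(c: Confusion) -> float:
    # Proportion of true fractures that are detected (recall)
    return c.tp / (c.tp + c.fn)


def specificity(c: Confusion) -> float:
    # Proportion of non-fracture images correctly classed as negative
    return c.tn / (c.tn + c.fp)


def precision(c: Confusion) -> float:
    # Proportion of positive calls that are true fractures
    return c.tp / (c.tp + c.fp)


def f1(c: Confusion) -> float:
    # Harmonic mean of precision and recall, equivalent to 2TP / (2TP + FP + FN)
    p, r = precision(c), sensitivity(c)
    return 2 * p * r / (p + r)


# Hypothetical counts, for illustration only
c = Confusion(tp=90, fp=15, tn=85, fn=10)
# Same sensitivity and specificity, but fractures are rarer: F1 drops
c_rare = Confusion(tp=9, fp=15, tn=85, fn=1)

for name, m in [("common", c), ("rare", c_rare)]:
    print(name, sensitivity(m), specificity(m), round(f1(m), 3))
```

Both hypothetical matrices have 90% sensitivity and 85% specificity, yet their F1 scores differ, so a reported F1 should be read alongside the case mix of the underlying studies.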

For postoperative predictions such as mortality, the mean AUC was 0.84 for the AI models and 0.79 for the comparator (traditional statistical) models, a difference that was not statistically significant (p = 0.09).
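
AUC can be read as the probability that a model assigns a higher risk score to a randomly chosen patient who had the outcome (for example, died) than to one who did not. A minimal sketch of that pairwise (Mann-Whitney) calculation follows; the scores are invented for illustration and the function name `auc` is hypothetical. It computes a single AUC and does not reproduce the study's statistical comparison between models.

```python
from itertools import product


def auc(pos_scores: list[float], neg_scores: list[float]) -> float:
    """AUC as the probability that a positive case outranks a negative case.

    Ties count as half a win, matching the Mann-Whitney U formulation.
    """
    wins = 0.0
    for p, n in product(pos_scores, neg_scores):
        if p > n:
            wins += 1.0
        elif p == n:
            wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))


# Hypothetical predicted mortality risks, for illustration only
died = [0.9, 0.8, 0.4]
survived = [0.7, 0.3, 0.2]
print(auc(died, survived))  # 8 of 9 pairs ranked correctly
```

An AUC of 0.5 corresponds to chance-level ranking and 1.0 to perfect separation, which puts the reported values of 0.84 and 0.79 in context.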

The authors concluded that, while AI models are promising for hip fracture diagnosis, their performance was comparable with that of expert radiologists and surgeons. They added that AI outcome prediction appears to provide no substantial benefit over traditional multivariable predictive statistics.

Citation

Lex JR et al. Artificial Intelligence for Hip Fracture Detection and Outcome Prediction: A Systematic Review and Meta-analysis. JAMA Netw Open 2023.